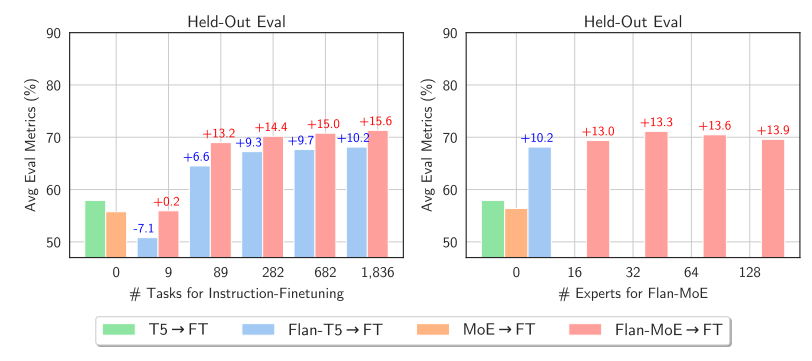

https://arxiv.org/abs/2305.14705

Mixture-of-Experts Meets Instruction Tuning: A Winning Combination for Large Language Models

Sparse Mixture-of-Experts (MoE) is a neural architecture design that can be utilized to add learnable parameters to Large Language Models (LLMs) without increasing inference cost. Instruction tuning is a technique for training LLMs to follow instructions.

This paper "M..
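The claim that sparse MoE adds parameters without adding inference cost can be illustrated with a toy top-k routed layer. This is a minimal sketch in plain Python, not the paper's implementation: the class `SparseMoE`, its linear experts, the softmax-over-selected-logits gating, and all sizes are assumptions chosen for illustration.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


class SparseMoE:
    """Hypothetical toy layer: a linear router plus linear experts."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.dim = dim
        self.top_k = top_k
        # Router: one row of weights per expert.
        self.router = [[rng.gauss(0, 0.1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Each expert is a dim x dim linear map (an assumption; real
        # experts are usually feed-forward blocks).
        self.experts = [[[rng.gauss(0, 0.1) for _ in range(dim)]
                         for _ in range(dim)]
                        for _ in range(num_experts)]

    def num_parameters(self):
        """Total learnable parameters: grows with the number of experts."""
        n = len(self.experts)
        return n * self.dim + n * self.dim * self.dim

    def forward(self, x):
        """Route one token to its top_k experts and mix their outputs."""
        logits = matvec(self.router, x)
        chosen = sorted(range(len(logits)),
                        key=lambda i: logits[i], reverse=True)[:self.top_k]
        gates = softmax([logits[i] for i in chosen])
        out = [0.0] * self.dim
        # Only the chosen experts are evaluated, so expert compute per
        # token depends on top_k, not on the total number of experts.
        for gate, i in zip(gates, chosen):
            y = matvec(self.experts[i], x)
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out, chosen


small = SparseMoE(dim=4, num_experts=2, top_k=2)
large = SparseMoE(dim=4, num_experts=8, top_k=2)
token = [1.0, -0.5, 0.25, 2.0]
_, used_small = small.forward(token)
_, used_large = large.forward(token)
print(small.num_parameters(), len(used_small))
print(large.num_parameters(), len(used_large))
```

In this sketch the 8-expert layer holds four times the parameters of the 2-expert layer, yet both evaluate two experts per token. The router itself still scores every expert, so the cost is roughly constant rather than strictly identical.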