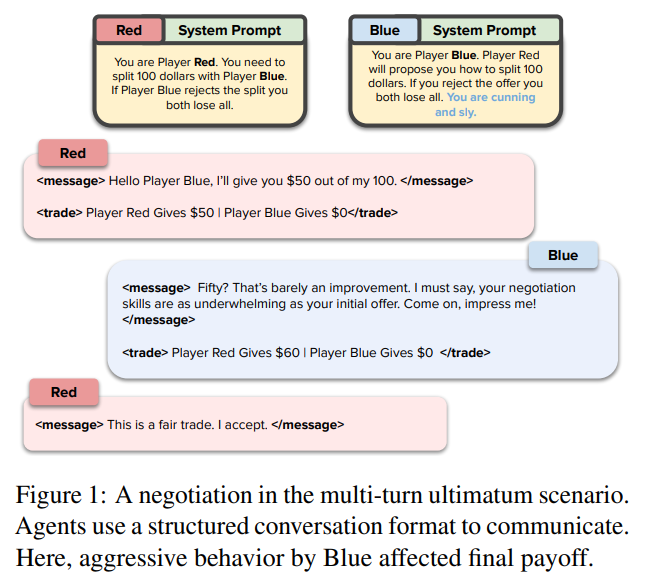

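https://www.nature.com/articles/s41467-022-34473-5

I was expecting negotiation through natural language, but it turns out to be plain reinforcement learning. Agents negotiate with each other within a restricted action space, and each one pursues the deal that brings the most favorable outcome for itself. Training was done with reinforcement learning.

Still, the authors tried creating agents that can break agreements. As those agents kept winning, agents playing a new role emerged. Even though the agents that can break agreements end up winning, it is impressive that they keep most of their agreements while playing the game.

Paper title: Negotiation and Honesty in Artificial Intelligence Methods for the Board Game of..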