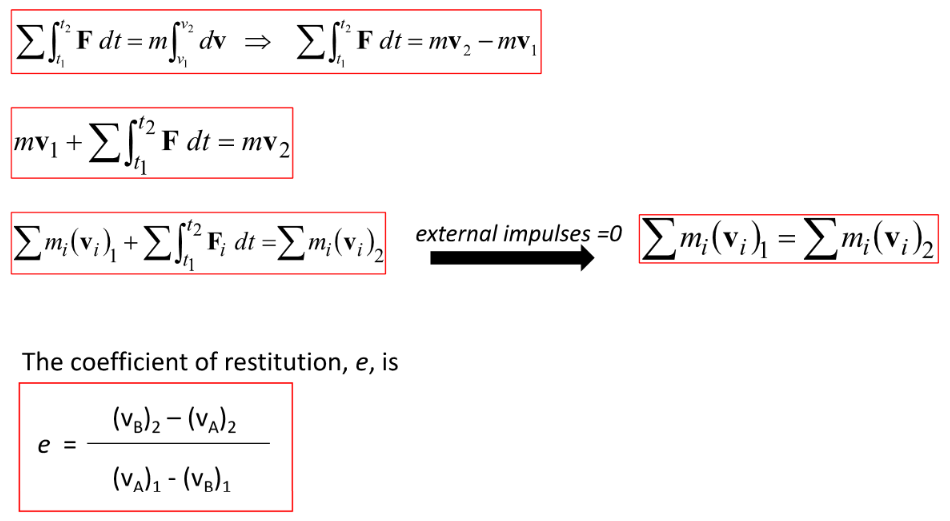

시험 전 마지막 진도가 충돌이었습니다.그래서 나왔던 식은 위와 같았습니다. 이제부턴 충돌에 각이 생기고, Line of impact만 생각해서 연산하면 됩니다. 이 문제에서 사이즈는 무시해도 됩니다.X축 충돌만 존재하므로 y축 속도는 변함 없고, mv + mv = mv + mv에 잘 넣어서 연산하고, e = (v - v) / (v - v)를 구하면 끝 입니다. 이제 각 운동량이 나옵니다.더보기운동량은 물체의 운동 상태를 나타내는 물리량으로, 크게 두 가지 종류가 있습니다: 선운동량(Linear Momentum)과 각운동량(Angular Momentum). 각각에 대해 자세히 설명하면 다음과 같습니다:1. 선운동량 (Linear Momentum)정의선운동량은 질량이 있는 물체가 일정한 속도로 운동할 때, ..