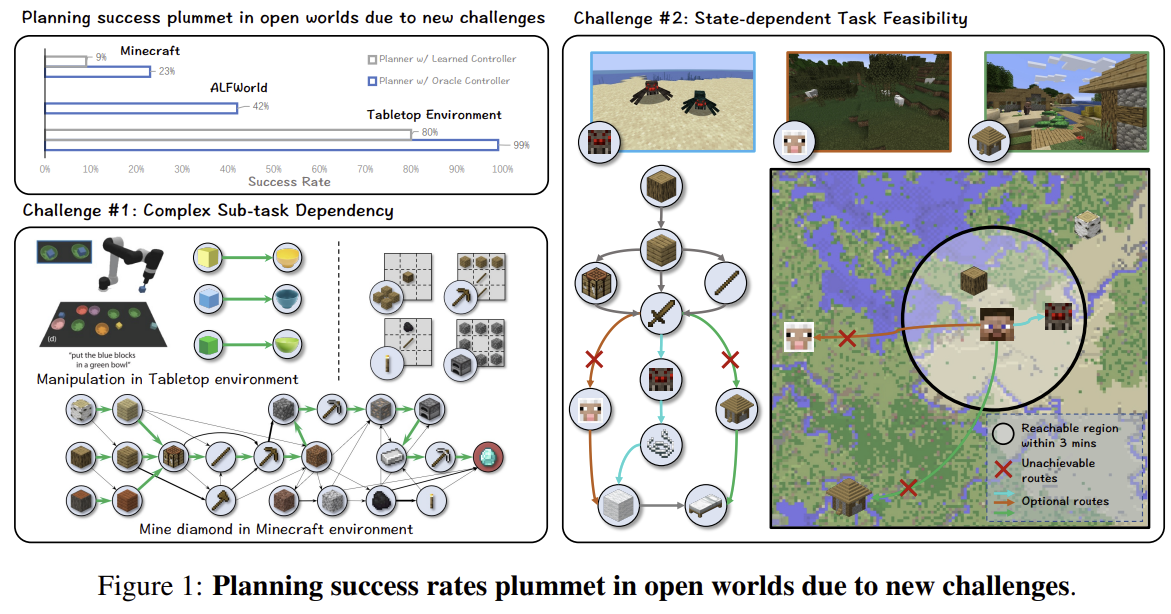

[JARVIS-1: Open-World Multi-task Agents with Memory-Augmented Multimodal Language Models](https://arxiv.org/abs/2311.05997) (arxiv.org)

Achieving human-like planning and control with multimodal observations in an open world is a key milestone for more functional generalist agents. Existing approaches can handle certain long-horizon tasks in an open world. However, they still struggle when...

JARVIS-1 is a multimodal..