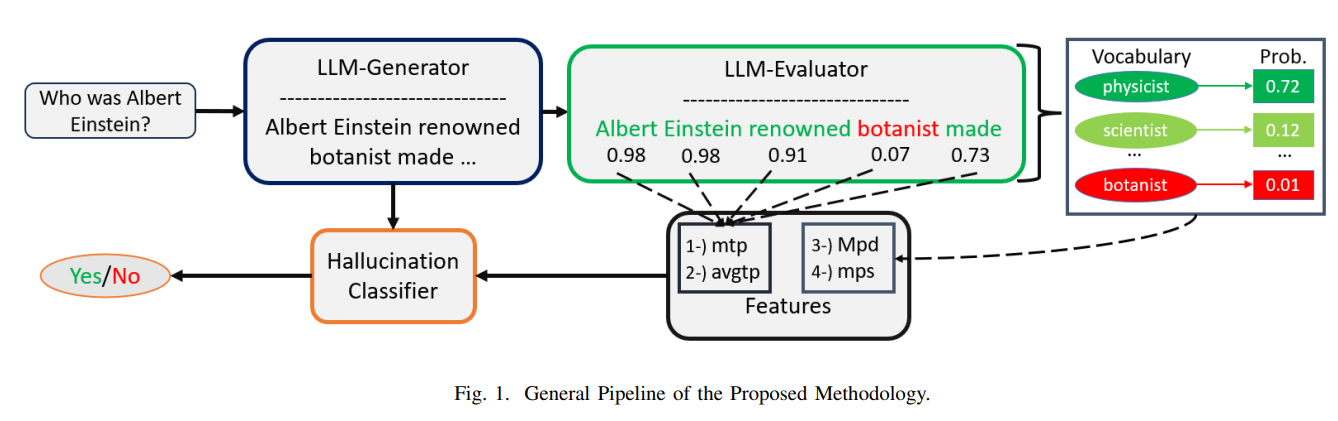

[Towards Mitigating Hallucination in Large Language Models via Self-Reflection](https://arxiv.org/abs/2310.06271)

Large language models (LLMs) have shown promise for generative and knowledge-intensive tasks including question-answering (QA) tasks. However, the practical deployment still faces challenges, notably the issue of "hallucination", where models generate plau…

Sounds plausible, but is not factual or is absur…