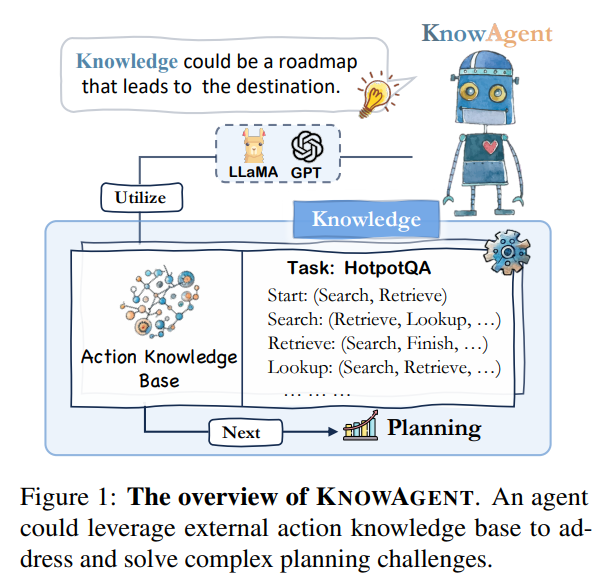

https://arxiv.org/abs/2403.03101

KnowAgent: Knowledge-Augmented Planning for LLM-Based Agents

Large Language Models (LLMs) have demonstrated great potential in complex reasoning tasks, yet they fall short when tackling more sophisticated challenges, especially when interacting with environments through generating executable actions. This inadequacy..

https://zjunlp.github.io/project/KnowAg..