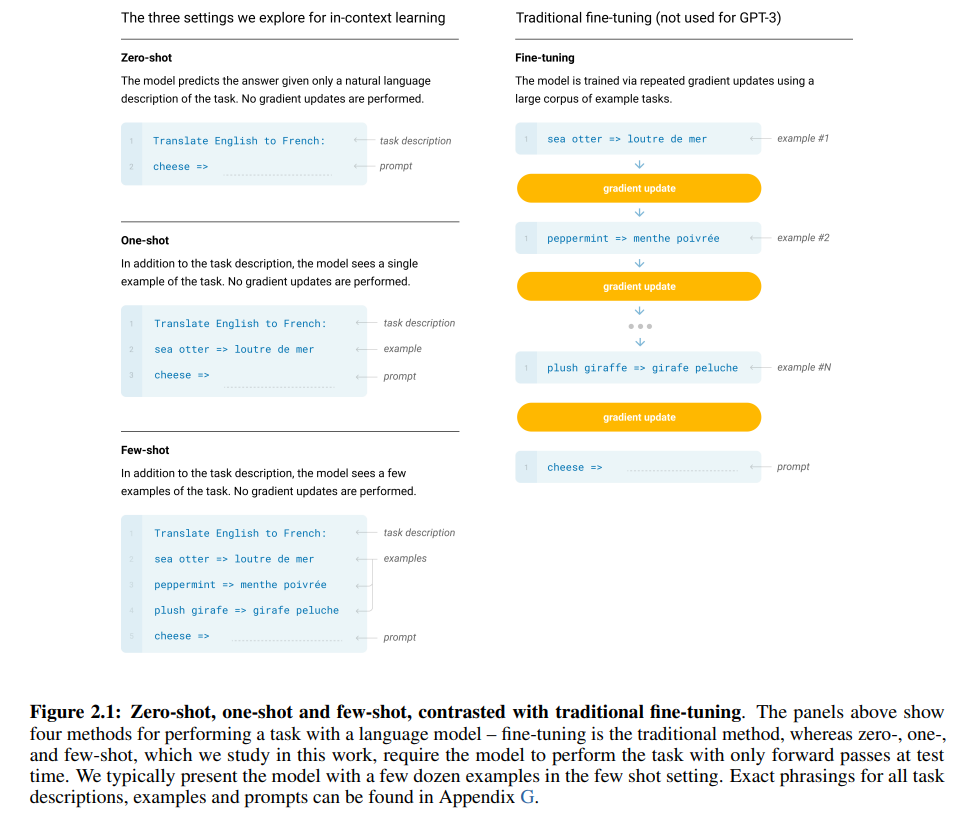

[Language Models are Few-Shot Learners](https://arxiv.org/abs/2005.14165)

This figure explains few-shot learning clearly: the model's parameters are never changed, and only a few examples are placed in the prompt...
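The idea can be sketched as plain string construction. This is a minimal sketch that assumes a simple text prompt format: a task description, then K demonstrations joined with a `=>` separator, then an unfinished query. The function name `build_few_shot_prompt` and the separator are hypothetical choices for illustration. No model is called here, and nothing is trained. The English-to-French pairs follow the style of the paper's translation example.

```python
def build_few_shot_prompt(task_description, examples, query, sep=" => "):
    """Build a few-shot prompt (hypothetical helper, for illustration only).

    Layout: task description, then K demonstrations as "input => output",
    then the query with its output left blank for the model to complete.
    Only the input text changes. No model weights are updated.
    """
    lines = [task_description]
    for source, target in examples:
        lines.append(f"{source}{sep}{target}")
    # Leave the query unfinished. rstrip() drops the trailing space of the separator.
    lines.append(f"{query}{sep}".rstrip())
    return "\n".join(lines)


# K = 3 demonstrations (few-shot). With examples=[] this becomes zero-shot.
examples = [
    ("sea otter", "loutre de mer"),
    ("peppermint", "menthe poivrée"),
    ("plush girafe", "girafe peluche"),
]
prompt = build_few_shot_prompt("Translate English to French:", examples, "cheese")
print(prompt)
```

Switching between zero-shot, one-shot and few-shot only means passing 0, 1 or K demonstrations. The same frozen model receives different text, and that difference is all that changes.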