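import numpy as np
import matplotlib.pyplot as plt
import scipy.io

# Set up the parameters you will use for this part of the exercise
input_layer_size = 400  # 20x20 input images of digits
num_labels = 10         # 10 labels, from 1 to 10 (the digit 0 is mapped to label 10)

# Mount Google Drive so the .mat file can be read from Colab
from google.colab import drive
drive.mount('/content/drive')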

# Given code
def displayData(X, example_width=None):
    """Display the rows of X (one unrolled image per row) in a grid."""
    # Assume square images unless a width is given
    if example_width is None:
        example_width = int(round(np.sqrt(X.shape[1])))

    plt.set_cmap("gray")

    m, n = X.shape
    example_height = int(n / example_width)

    # Number of images per row and per column of the grid
    display_rows = int(np.floor(np.sqrt(m)))
    display_cols = int(np.ceil(m / display_rows))

    # Blank canvas (value -1) with a 1-pixel border between images
    pad = 1
    display_array = -np.ones((pad + display_rows * (example_height + pad),
                              pad + display_cols * (example_width + pad)))

    # Copy each example into its tile, scaled to [-1, 1]
    curr_ex = 0
    for j in range(display_rows):
        for i in range(display_cols):
            if curr_ex >= m:
                break
            max_val = np.max(np.abs(X[curr_ex, :]))
            rows = pad + j * (example_height + pad) + np.arange(example_height)
            cols = pad + i * (example_width + pad) + np.arange(example_width)
            display_array[np.ix_(rows, cols)] = np.reshape(X[curr_ex, :], (example_height, example_width)) / max_val
            curr_ex += 1

    # Transpose to undo the column-major (MATLAB-style) unrolling of the pixels
    plt.imshow(display_array.T, clim=(-1, 1))
    plt.axis('off')
    plt.show()

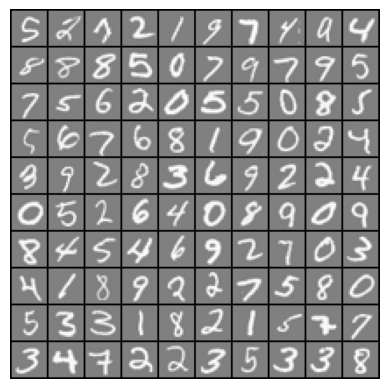
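`displayData` transposes the finished canvas before `imshow`. The reason, presumably, is that `ex3data1.mat` was written for MATLAB/Octave, where each 400-value row is a 20x20 image unrolled column by column. A small sketch, using a synthetic row instead of the real data, showing that NumPy's `order='F'` reshape and a row-major reshape followed by `.T` produce the same image:

```python
import numpy as np

# Synthetic stand-in for one row of X: 400 pixel values of a 20x20 image.
row = np.arange(400, dtype=float)

# MATLAB/Octave-style (column-major) reshape.
img_f = np.reshape(row, (20, 20), order="F")

# NumPy's default (row-major) reshape followed by a transpose.
img_c_t = np.reshape(row, (20, 20)).T

# Both approaches give the same 20x20 image.
print(np.array_equal(img_f, img_c_t))
```

Transposing the whole `display_array` also swaps the grid's rows and columns, which makes no visible difference for randomly chosen examples; reshaping each tile with `order='F'` and dropping the final `.T` would avoid that side effect.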

# ==================== Part 1: Loading and Visualizing Data ====================
# We start the exercise by first loading and visualizing the dataset.
# You will be working with a dataset that contains handwritten digits.

# Load Training Data
print('Loading and Visualizing Data ...')

mat_file_name = "/content/drive/MyDrive/Colab Notebooks/ex3data1.mat"  # Write your path
mat_file = scipy.io.loadmat(mat_file_name)

X = np.array(mat_file['X'])  # 5000 x 400: one unrolled 20x20 image per row
y = np.array(mat_file['y'])  # 5000 x 1: labels 1..10 (10 stands for the digit 0)
m = X.shape[0]

# Randomly select 100 data points to display
rand_indices = np.random.permutation(m)
sel = X[rand_indices[0:100], :]

displayData(sel)

# ============ Part 2a: Vectorize Logistic Regression ============
# In this part of the exercise, you will reuse your logistic regression
# code from the last exercise. Your task here is to make sure that your
# regularized logistic regression implementation is vectorized. After
# that, you will implement one-vs-all classification for the handwritten
# digit dataset.

# Test case for lrCostFunction
print('Testing lrCostFunction() with regularization\n')

theta_t = np.array([[-2], [-1], [1], [2]])
X_t = np.hstack([np.ones((5, 1)), np.reshape(np.arange(1, 16), (3, 5)).T / 10])
y_t = np.array([1, 0, 1, 0, 1]).reshape(-1, 1)
lambda_t = 3

def sigmoid(z):
    g = 1 / (1 + np.exp(-z))
    return g

def lrCostFunction(theta, X, y, lambda_1):
    # YOUR CODE HERE
    m = len(y)
    h = sigmoid(X @ theta)

    # Cross-entropy cost plus L2 penalty; the bias term theta[0] is not regularized
    J = (-1 / m) * (y.T @ np.log(h) + (1 - y).T @ np.log(1 - h)) \
        + lambda_1 / (2 * m) * (np.sum(theta * theta) - theta[0] * theta[0])

    # Gradient: regularize every component, then remove the penalty from theta[0]
    grad = (1 / m) * (X.T @ (h - y)) + (lambda_1 / m) * theta
    grad[0] = grad[0] - (lambda_1 / m) * theta[0]

    return J, grad

J, grad = lrCostFunction(theta_t, X_t, y_t, lambda_t)

print('Cost: ', J)
print('Expected cost: 2.534819\n')
print('Gradients: ')
print(grad.reshape(-1, 1))
print('\nExpected gradients:')
print(' 0.146561\n -0.548558\n 0.724722\n 1.398003\n')

Testing lrCostFunction() with regularization

Cost: [[2.5348194]]
Expected cost: 2.534819

Gradients:
[[ 0.14656137]
 [-0.54855841]
 [ 0.72472227]
 [ 1.39800296]]

Expected gradients:
 0.146561
 -0.548558
 0.724722
 1.398003
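A quick way to confirm that a vectorized gradient is right is to compare it with central finite differences, (J(theta + eps*e_k) - J(theta - eps*e_k)) / (2*eps) for each component k. The sketch below re-implements the same regularized cost in a self-contained form (`cost_and_grad` and `numerical_grad` are hypothetical helper names, not part of the exercise code), using 1-D `theta` and `y`, which is also the shape `scipy.optimize.minimize` passes in Part 2b:

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost_and_grad(theta, X, y, lam):
    # Regularized logistic regression cost and gradient; theta[0] (bias) is not penalized.
    m = len(y)
    h = sigmoid(X @ theta)
    J = -(y @ np.log(h) + (1 - y) @ np.log(1 - h)) / m + lam / (2 * m) * np.sum(theta[1:] ** 2)
    grad = X.T @ (h - y) / m
    grad[1:] += lam / m * theta[1:]
    return J, grad

def numerical_grad(f, theta, eps=1e-4):
    # Central difference for each component: (f(theta + eps*e_k) - f(theta - eps*e_k)) / (2*eps)
    g = np.zeros_like(theta)
    for k in range(theta.size):
        e = np.zeros_like(theta)
        e[k] = eps
        g[k] = (f(theta + e)[0] - f(theta - e)[0]) / (2 * eps)
    return g

# Same test case as above, flattened to 1-D.
theta_t = np.array([-2.0, -1.0, 1.0, 2.0])
X_t = np.hstack([np.ones((5, 1)), np.reshape(np.arange(1, 16), (3, 5)).T / 10])
y_t = np.array([1, 0, 1, 0, 1])
lambda_t = 3

J, analytic = cost_and_grad(theta_t, X_t, y_t, lambda_t)
numeric = numerical_grad(lambda th: cost_and_grad(th, X_t, y_t, lambda_t), theta_t)

print("cost:", J)
print("max |analytic - numeric|:", np.max(np.abs(analytic - numeric)))
```

The two gradients should agree to many decimal places on this test case; a large gap usually points to a missing `1/m` factor or to `theta[0]` being regularized by mistake.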

# ============ Part 2b: One-vs-All Training ============
from scipy.optimize import minimize

print('Training One-vs-All Logistic Regression...')

lambda_2 = 0.1

def oneVsAll(X, y, num_labels, lambda_):
    m, n = X.shape
    all_theta = np.zeros((num_labels, n + 1))

    # Add the intercept column of ones
    X_1 = np.hstack((np.ones((m, 1)), X))

    # minimize() works with 1-D vectors, so flatten the (m, 1) label array once
    y = y.flatten()

    for i in range(num_labels):
        # Small random starting point for the parameters of label i + 1
        initial_theta = np.random.uniform(-0.01, 0.01, n + 1)

        # Train "label i + 1" vs. "everything else"; jac=True because
        # lrCostFunction returns (cost, gradient). With such a tiny gtol,
        # the run is limited mainly by maxiter.
        result = minimize(fun=lrCostFunction, x0=initial_theta,
                          args=(X_1, (y == i + 1).astype(int), lambda_),
                          method='CG', jac=True,
                          options={'maxiter': 150, 'gtol': 1e-15, 'eps': 1e-15})
        all_theta[i, :] = result.x

    return all_theta

all_theta = oneVsAll(X, y, num_labels, lambda_2)
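Inside `oneVsAll`, the classifier for label `i + 1` is trained on the binary target `(y == i + 1).astype(int)`. A minimal sketch of the same encoding for all ten labels at once, on a made-up label vector, shows what each classifier sees: column `k` of the hypothetical matrix `Y` is the 0/1 target for label `k + 1`.

```python
import numpy as np

num_labels = 10
# Made-up labels in the dataset's convention (1..10, where 10 stands for the digit 0).
y = np.array([10, 1, 2, 10, 5, 9])

# Compare every label with every class 1..10 via broadcasting:
# Y[:, k] is the binary target for the classifier of label k + 1.
Y = (y[:, None] == np.arange(1, num_labels + 1)).astype(int)

print(Y.shape)
print(Y[:, 9])  # targets for label 10 (digit 0)
```

Each row of `Y` contains exactly one 1, so every example is a positive for one classifier and a negative for the other nine.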

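## ================ Part 3: Predict for One-Vs-All ================
def predictOneVsAll(all_theta, X):
    m = X.shape[0]
    X_1 = np.hstack((np.ones((m, 1)), X))

    # Score of every label for every example; the sigmoid is monotonic,
    # so the largest X_1 @ theta also gives the largest probability
    htheta = X_1.dot(all_theta.T)

    # argmax is 0-based, while the labels run from 1 to 10
    p = np.argmax(htheta, axis=1) + 1
    return p

pred = predictOneVsAll(all_theta, X)

y = y.reshape(-1)
print('Training Set Accuracy: ', np.mean(pred == y) * 100)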

Training Set Accuracy: 96.32
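The overall accuracy hides how individual digits fare. A sketch of a per-digit breakdown, assuming `pred` and `y` are 1-D arrays in the dataset's 1..10 convention (10 stands for the digit 0). The arrays below are made up for illustration, and `per_digit` is a hypothetical name:

```python
import numpy as np

# Made-up example arrays; in the notebook these would be `pred` and `y`.
pred = np.array([1, 2, 10, 10, 3, 3, 7])
y = np.array([1, 2, 10, 4, 3, 8, 7])

# Label 10 stands for the digit 0, so "% 10" maps labels back to digits.
pred_digits = pred % 10
y_digits = y % 10

# Percentage of correctly classified examples for each digit present in y.
per_digit = {}
for d in range(10):
    mask = y_digits == d
    if mask.any():
        per_digit[d] = np.mean(pred_digits[mask] == d) * 100

for d, acc in per_digit.items():
    print(f"digit {d}: {acc:.1f}%")
```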

