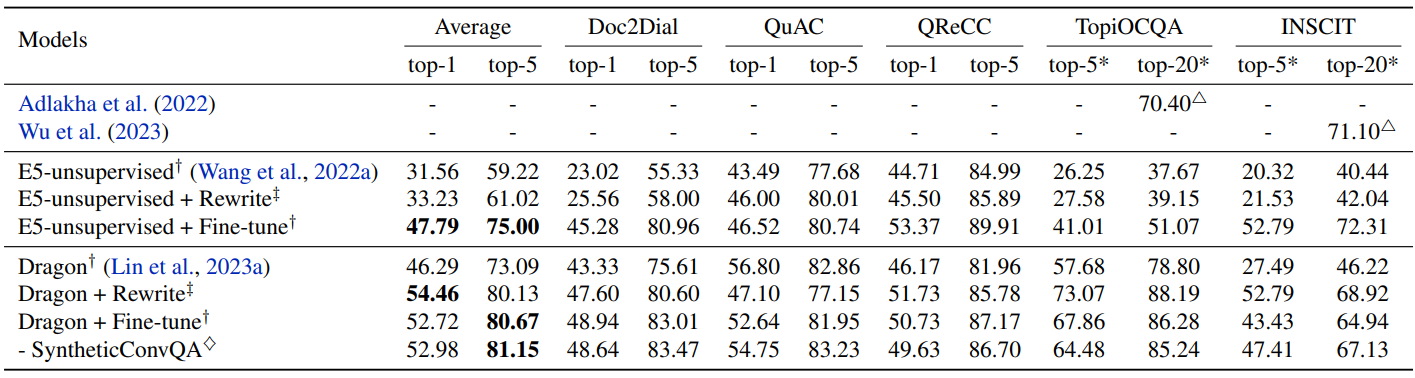

2025.03.17 - [AI/Paper Review or In Progress] - Embedding + Generation Model Preliminary Paper Survey 5 - Organizing Datasets and Evaluation Data

Here ..