I don't know much about the Driving Scenario toolbox or reinforcement learning yet, so I'll be adding material on the two in alternation.

I'll collect the URLs here one at a time...

I still haven't really figured out web crawling....
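As a starting point for the crawling step, here is a minimal sketch that pulls the visible text out of one page's HTML using only the Python standard library. The `TextExtractor` class, the `html_to_text` helper, and the sample HTML string are illustrative assumptions, not the script that was actually used for this post.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped tag
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def html_to_text(html: str) -> str:
    """Return the visible text of an HTML document, one chunk per line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)


# Hypothetical sample input standing in for a downloaded documentation page.
sample_html = (
    "<html><head><style>p{}</style></head><body>"
    "<h1>rlSimulinkEnv</h1><p>Create environment object</p>"
    "<script>var x;</script></body></html>"
)
print(html_to_text(sample_html))
```

For a real page, the HTML string could come from something like `urllib.request.urlopen(url).read().decode("utf-8")`, though whether that is enough for the MathWorks pages (for example, if content is rendered by JavaScript) is something I have not verified.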

https://kr.mathworks.com/help/driving/ref/drivingscenariodesigner-app.html

Design driving scenarios, configure sensors, and generate synthetic data - MATLAB - MathWorks Korea

Starting in R2018b, in the Camera Settings group of the Driving Scenario Designer app, the Image Width and Image Height parameters set their expected values. Previously, Image Width set the height of images produced by the camera, and Image Height set the

Train Reinforcement Learning Agent in MDP Environment - MATLAB & Simulink

https://www.mathworks.com/help/driving/ref/scenarioreader.html

Read driving scenario into model - Simulink

Train Reinforcement Learning Agent in Basic Grid World - MATLAB & Simulink

The Driving Toolbox has more material than reinforcement learning, so I'll add one driving page for every two reinforcement learning pages.

Create Simulink Environment and Train Agent - MATLAB & Simulink

https://www.mathworks.com/help/driving/ref/roadrunnerscenarioreader.html

Reads selected topic from RoadRunner scenario - Simulink

Starting in R2023a, MapLocation and its bus definition BusVehicleMaplocation are not supported, when you access Vehicle Pose using a RoadRunner Scenario Reader block. To read an actor location, use the new dedicated topic Actor Lane Location. The change al

https://www.mathworks.com/help/reinforcement-learning/ug/design-dqn-using-rl-designer.html

Design and Train Agent Using Reinforcement Learning Designer - MATLAB & Simulink

https://www.mathworks.com/help/reinforcement-learning/ug/what-is-reinforcement-learning.html

What Is Reinforcement Learning? - MATLAB & Simulink

https://www.mathworks.com/help/driving/ref/roadrunnerscenariowriter.html

Write selected topic to RoadRunner scenario - Simulink

https://www.mathworks.com/help/reinforcement-learning/ug/create-custom-simulink-environments.html

Create Custom Simulink Environments - MATLAB & Simulink

https://www.mathworks.com/help/driving/ref/roadrunnerscenario.html

Define interface for Simulink actor model - Simulink

https://www.mathworks.com/help/reinforcement-learning/ref/rlsimulinkenv.html

Create environment object from a Simulink model already containing agent and environment - MATLAB rlSimulinkEnv

https://www.mathworks.com/help/driving/ug/trajectory-follower-with-roadrunner-scenario.html

Trajectory Follower with RoadRunner Scenario - MATLAB & Simulink

https://www.mathworks.com/help/reinforcement-learning/ref/createintegratedenv.html

Create environment object from a Simulink environment model that does not contain an agent block - MATLAB createIntegratedEnv

https://www.mathworks.com/help/driving/ref/scenariosimulation.html

Create, access, and control scenario simulation - MATLAB

https://www.mathworks.com/help/driving/ref/roadrunner.openscenario.html

Open scenario in RoadRunner Scenario using MATLAB - MATLAB openScenario

Obtain observation data specifications from reinforcement learning environment, agent, or experience buffer - MATLAB getObservat

https://www.mathworks.com/help/driving/ref/roadrunner.html

Start RoadRunner application using MATLAB - MATLAB

https://www.mathworks.com/help/reinforcement-learning/ref/rl.env.basicgridworld.getactioninfo.html

Obtain action data specifications from reinforcement learning environment, agent, or experience buffer - MATLAB getActionInfo

https://www.mathworks.com/help/reinforcement-learning/ug/define-reward-and-observation-signals.html

Define Reward and Observation Signals in Custom Environments - MATLAB & Simulink

https://www.mathworks.com/help/driving/ref/ultrasonicdetectiongenerator-system-object.html

Generate ultrasonic range detections in driving scenario or RoadRunner Scenario - MATLAB

https://www.mathworks.com/help/reinforcement-learning/ug/train-reinforcement-learning-agents.html

Train Reinforcement Learning Agents - MATLAB & Simulink

https://www.mathworks.com/help/driving/ref/drivingradardatagenerator-system-object.html

Generate radar sensor detections or track reports from driving scenario or RoadRunner Scenario - MATLAB

https://www.mathworks.com/help/reinforcement-learning/ref/generaterewardfunction.html

Generate a reward function from control specifications to train a reinforcement learning agent - MATLAB generateRewardFunction

https://www.mathworks.com/help/driving/ref/lidarpointcloudgenerator-system-object.html

Generate lidar point cloud data for driving scenario or RoadRunner Scenario - MATLAB

You can now create a lidarPointCloudGenerator system object with sensor properties and then get the point cloud measurements without any manual actor pose inputs. First, you can now use the addSensors object function of the drivingScenario object to regist

Generate Reward Function from a Model Verification Block for a Water Tank System - MATLAB & Simulink

https://www.mathworks.com/help/driving/ref/visiondetectiongenerator-system-object.html

Generate vision detections for driving scenario or RoadRunner Scenario - MATLAB

https://www.mathworks.com/help/reinforcement-learning/ug/ddpg-agents.html

Deep Deterministic Policy Gradient (DDPG) Agents - MATLAB & Simulink

https://www.mathworks.com/help/driving/ref/objectdetection.html

Report for single object detection - MATLAB

When passing an objectDetection object to a tracker, the ObjectAttributes property must be specified as a scalar structure or a cell containing a scalar structure.

https://www.mathworks.com/help/reinforcement-learning/ref/rl.option.rlddpgagentoptions.html

Options for DDPG agent - MATLAB

The properties defining the probability distribution of the Ornstein-Uhlenbeck (OU) noise model have been renamed. DDPG agents use OU noise for exploration. The Variance property has been renamed StandardDeviation. The VarianceDecayRate property has been re

https://www.mathworks.com/help/vdynblks/ref/vehiclebody3dof.html

3DOF rigid vehicle body to calculate longitudinal, lateral, and yaw motion - Simulink

https://www.mathworks.com/help/reinforcement-learning/ug/proximal-policy-optimization-agents.html

Proximal Policy Optimization (PPO) Agents - MATLAB & Simulink

https://www.mathworks.com/help/reinforcement-learning/ref/rl.agent.rlppoagent.html

Proximal policy optimization (PPO) reinforcement learning agent - MATLAB

https://www.mathworks.com/help/vdynblks/ref/vehiclebody3doflongitudinal.html

3DOF rigid vehicle body to calculate longitudinal, vertical, and pitch motion - Simulink

https://www.mathworks.com/help/reinforcement-learning/ref/rl.function.rlcontinuousgaussianactor.html

Stochastic Gaussian actor with a continuous action space for reinforcement learning agents - MATLAB

Up to this point, that comes to roughly 645,117 characters.
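For reference, here is a small sketch of how collected page texts could be written to a CSV with a single `Text` column (the column name the training code in this post reads) and how the total character count could be tallied. The helper names and the two sample strings are hypothetical; the real dataset file is not reproduced here.

```python
import csv
import io


def write_text_csv(pages, fileobj):
    """Write one row per page into a CSV with a single 'Text' column."""
    writer = csv.DictWriter(fileobj, fieldnames=["Text"])
    writer.writeheader()
    for page in pages:
        writer.writerow({"Text": page})


def total_characters(pages):
    """Sum the character lengths of all collected page texts."""
    return sum(len(page) for page in pages)


# Hypothetical sample data standing in for the crawled documentation text.
pages = [
    "Train Reinforcement Learning Agents",
    "Read driving scenario into model",
]

buffer = io.StringIO()  # an open file object would work the same way
write_text_csv(pages, buffer)
print(total_characters(pages))
```

Note that `len()` counts Unicode characters, not bytes or tokens, so the number of tokens the model sees will differ from this count.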

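I'll train on it once and then take another look.

I thought I had crawled quite a lot.....

It's less than I expected..... ㅠ

Let's give it a try anyway.

This time I'll write the code all in one go.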

from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    BitsAndBytesConfig,
    HfArgumentParser,
    TrainingArguments,
    pipeline,
    logging,
    Trainer,
    DataCollatorForLanguageModeling
)

from peft import (
    LoraConfig,
    PeftModel,
    prepare_model_for_kbit_training,
    get_peft_model,
)

import os, torch

from datasets import load_dataset

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

device

base_model = "/kaggle/input/llama-3/transformers/8b-hf/1"

new_model = "llama-3-8b-chat-matlab"

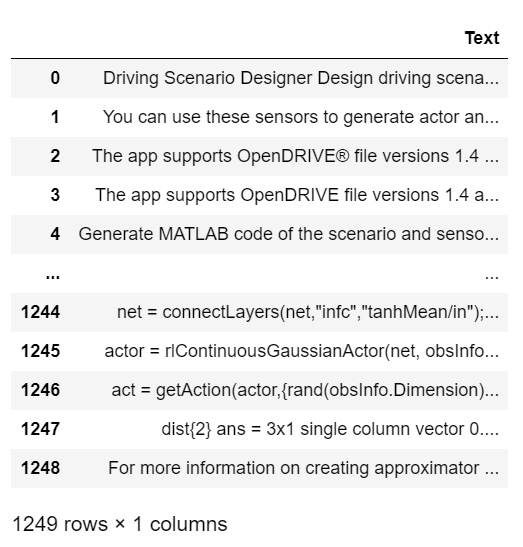

dataset = load_dataset('csv', data_files='/kaggle/input/clean-long-matlabdata/cleaned_long_matlab_data.csv')

torch_dtype = torch.float16

attn_implementation = "eager"

# QLoRA config

bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch_dtype,
    bnb_4bit_use_double_quant=True,
)

# Load model

model = AutoModelForCausalLM.from_pretrained(
    base_model,
    quantization_config=bnb_config,
    device_map="auto",
    attn_implementation=attn_implementation
)

# Load tokenizer

tokenizer = AutoTokenizer.from_pretrained(base_model)

tokenizer.pad_token = tokenizer.eos_token

# LoRA config

peft_config = LoraConfig(
    r=32,
    lora_alpha=32,
    lora_dropout=0.2,
    bias="none",
    task_type="CAUSAL_LM",
    target_modules=['up_proj', 'down_proj', 'gate_proj', 'k_proj', 'q_proj', 'v_proj', 'o_proj']
)

model = get_peft_model(model, peft_config)
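To get a feel for how many weights this LoRA setup trains, here is a back-of-the-envelope sketch. It assumes the checkpoint uses the published Llama 3 8B shapes (hidden size 4096, MLP size 14336, 32 layers, 8 key/value heads of dimension 128); those numbers are not printed anywhere in this post, so treat them as an assumption. Each adapted linear layer gets two low-rank matrices, which adds `r * (in_features + out_features)` parameters.

```python
# Assumed Llama 3 8B dimensions (from the published model config,
# not read from the checkpoint used in this post).
HIDDEN = 4096
INTERMEDIATE = 14336
KV_DIM = 8 * 128  # 8 key/value heads * head dimension 128
NUM_LAYERS = 32

# (in_features, out_features) of each targeted projection in one layer.
SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}


def lora_param_count(r, shapes, num_layers):
    """LoRA adds A (r x in) and B (out x r) per adapted linear layer."""
    per_layer = sum(r * (n_in + n_out) for n_in, n_out in shapes.values())
    return per_layer * num_layers


def lora_scaling(lora_alpha, r):
    """Standard LoRA scaling factor applied to the low-rank update."""
    return lora_alpha / r


trainable = lora_param_count(r=32, shapes=SHAPES, num_layers=NUM_LAYERS)
print(f"trainable LoRA parameters: {trainable:,}")
print(f"scaling factor: {lora_scaling(32, 32)}")
```

On the real model, `model.print_trainable_parameters()` from PEFT reports the actual count, which is the number to trust if it disagrees with this estimate.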

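def tokenize_function(examples):
    # truncation=True is given without max_length, so the limit falls back to tokenizer.model_max_length
    return tokenizer(examples['Text'], padding='max_length', truncation=True)

tokenized_dataset = dataset.map(tokenize_function, batched=True)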
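Because the tokenizer call sets no explicit `max_length`, very long documents can turn into very long training sequences. One common alternative, sketched here in plain Python, is to cut each token list into fixed-size blocks. The function name `split_into_blocks` and the block size are hypothetical choices, not something this post has tested.

```python
def split_into_blocks(token_ids, block_size):
    """Split a list of token ids into consecutive blocks of at most block_size."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    return [
        token_ids[start:start + block_size]
        for start in range(0, len(token_ids), block_size)
    ]


# Toy example: ten token ids cut into blocks of four.
blocks = split_into_blocks(list(range(10)), block_size=4)
print(blocks)
```

With a real tokenizer, the same idea would be applied to the `input_ids` of each row, so that no single training example exceeds the chosen block size.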

training_args = TrainingArguments(
    output_dir=new_model,
    overwrite_output_dir=True,
    num_train_epochs=1,
    optim="paged_adamw_32bit",
    per_device_train_batch_size=2,
    gradient_accumulation_steps=2,
    save_steps=10_000,
    save_total_limit=2,
    prediction_loss_only=True,
    # Evaluation is disabled because the Trainer is given no eval_dataset
    evaluation_strategy="no",
    logging_steps=1,
    warmup_steps=10,
    logging_strategy="steps",
    learning_rate=2e-4,
    fp16=False,
    bf16=False,
    group_by_length=True,
)
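As a quick sanity check on these settings, the sketch below works out the effective batch size and an approximate number of optimizer steps per epoch. The dataset size of 1,000 rows is a made-up figure for illustration, and the step count is a rough estimate rather than the exact value the `Trainer` computes internally.

```python
import math


def effective_batch_size(per_device_batch, grad_accum_steps, num_devices=1):
    """Number of examples contributing to a single optimizer update."""
    return per_device_batch * grad_accum_steps * num_devices


def approx_steps_per_epoch(num_examples, per_device_batch, grad_accum_steps, num_devices=1):
    """Approximate optimizer updates in one pass over the data."""
    batches = math.ceil(num_examples / (per_device_batch * num_devices))
    return math.ceil(batches / grad_accum_steps)


# Values from the TrainingArguments in this post; the dataset size is hypothetical.
print(effective_batch_size(per_device_batch=2, grad_accum_steps=2))
print(approx_steps_per_epoch(num_examples=1000, per_device_batch=2, grad_accum_steps=2))
```

Gradient accumulation raises the effective batch size without raising peak activation memory, since only `per_device_train_batch_size` examples pass through the model at once.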

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=tokenized_dataset['train'],
    data_collator=DataCollatorForLanguageModeling(
        tokenizer=tokenizer,
        mlm=False,
    ),
)
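With `mlm=False`, `DataCollatorForLanguageModeling` builds the labels by copying `input_ids` and replacing padding positions with -100 so the loss ignores them. The plain-Python sketch below mimics only that labeling step on a toy sequence; the function name and the token ids are made up for illustration.

```python
IGNORE_INDEX = -100  # label value that the cross-entropy loss skips


def make_causal_lm_labels(input_ids, pad_token_id):
    """Copy input_ids as labels, masking every padding position."""
    return [IGNORE_INDEX if tok == pad_token_id else tok for tok in input_ids]


# Toy sequence: three real tokens followed by two padding tokens (id 2).
labels = make_causal_lm_labels([5, 6, 7, 2, 2], pad_token_id=2)
print(labels)
```

One side effect worth knowing: since this post sets `tokenizer.pad_token = tokenizer.eos_token`, any genuine end-of-sequence token shares the padding id and is masked out of the loss as well.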

os.environ["WANDB_DISABLED"] = "true"

trainer.train()

I keep running into errors caused by GPU memory size.......

I'll try running it on two T4s.

That fails too............

Sigh..........

It runs out of GPU memory....
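To see where the memory might be going, here is a rough estimate, not a measurement. It assumes about 8.03 billion parameters and a vocabulary of 128,256 tokens for Llama 3 8B, and it assumes the output logits are held in 32-bit floats for the loss. Activations, optimizer state, and quantization overhead are ignored, so real usage will be higher.

```python
GIB = 1024 ** 3


def weight_gib(num_params, bits_per_param):
    """Memory needed just to store the model weights."""
    return num_params * bits_per_param / 8 / GIB


def logits_gib(batch_size, seq_len, vocab_size, bytes_per_value=4):
    """Memory for one (batch, seq_len, vocab) logits tensor."""
    return batch_size * seq_len * vocab_size * bytes_per_value / GIB


NUM_PARAMS = 8.03e9   # assumed parameter count for Llama 3 8B
VOCAB_SIZE = 128_256  # assumed vocabulary size for Llama 3

print(f"fp16 weights : {weight_gib(NUM_PARAMS, 16):.2f} GiB")
print(f"4-bit weights: {weight_gib(NUM_PARAMS, 4):.2f} GiB")

# Logits alone grow linearly with sequence length (batch size 2 as in this post).
for seq_len in (512, 2048, 8192):
    print(f"logits at seq_len={seq_len}: {logits_gib(2, seq_len, VOCAB_SIZE):.2f} GiB")
```

If this estimate is in the right ballpark, the 4-bit weights fit comfortably on a 16 GB T4, and sequence length becomes the first thing to check, for example by passing an explicit `max_length` to the tokenizer.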

I'll stop here for today

and try again tomorrow with a clearer head.
