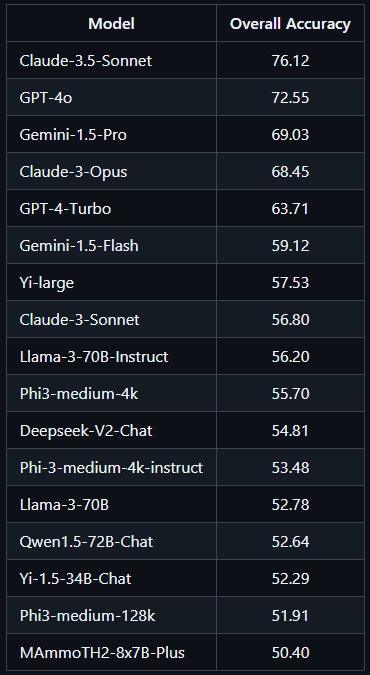

https://arxiv.org/abs/2411.10979

VidComposition: Can MLLMs Analyze Compositions in Compiled Videos?

The advancement of Multimodal Large Language Models (MLLMs) has enabled significant progress in multimodal understanding, expanding their capacity to analyze video content. However, existing evaluation benchmarks for MLLMs primarily focus on abstract video..

Abstract

Multimodal LLMs (MLLMs) have considerably..