    from fastapi import FastAPI
    from peft import PeftModel, PeftConfig
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Load the base model
    base_model = AutoModelForCausalLM.from_pretrained("SmolLM1.7B")

    # Load the PeftModel from a local path
    model = PeftModel.from_pretrained(base_model, "saved_model")

    # Load the tokenizer from a local path
    tokenizer = AutoTokenizer.from_pretrained("saved_model")


    def get_answer(prompt):
        # prompt = "what is a road runner?"
        inputs = tokenizer(prompt, return_tensors='pt')
        # Generate up to n (here 15) tokens beyond the input length
        generate_ids = model.generate(inputs.input_ids, max_length=inputs.input_ids.shape[1] + 15)
        output = tokenizer.batch_decode(generate_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0]
        # Drop the echoed prompt so only the generated continuation is returned
        output = output[len(prompt):]
        return output


    # Create the app object
    app = FastAPI()


    @app.get('/')
    def root():
        return {'message': 'Hello World'}


    @app.get('/chat_test')
    def chat_test(user_message):
        return {'message': get_answer(user_message)}


    @app.post('/chat')
    def chat(param: dict = {}):
        user_message = param.get('user_message', ' ')
        return {'message': get_answer(user_message)}

Save this as main.py and start the server with `uvicorn main:app --reload`.
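
The `get_answer` function above removes the prompt by slicing the decoded text with `len(prompt)`, which relies on the decoded output starting with exactly the same characters as the prompt. As a rough sketch of a slightly more defensive variant, the helper below (the name `strip_prompt` is hypothetical, not part of the original code) only slices when the prompt really is a prefix:

```python
def strip_prompt(decoded: str, prompt: str) -> str:
    """Return only the generated continuation of a decoded output.

    Hypothetical helper for illustration: it slices the prompt off
    only when the decoded text actually starts with it.
    """
    if decoded.startswith(prompt):
        return decoded[len(prompt):]
    # The tokenizer round trip changed the text, so return it untouched
    # rather than cutting off an arbitrary number of characters.
    return decoded


if __name__ == "__main__":
    prompt = "what is a road runner?"
    decoded = "what is a road runner? A fast-running bird."
    print(strip_prompt(decoded, prompt))
```

Whether this guard is needed depends on the tokenizer; it is only meant to show what the slicing step is doing.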

    import streamlit as st

    st.write('hello!')

Save this as st.py and launch it with `streamlit run st.py`.

Once you enter your email the first time, Streamlit opens the app right away at a local address.

The hello shows up as well.

Let's dress it up a bit!

    import streamlit as st

    st.title("My Chatbot")
    st.header("Custom Trained SmolLM Model")
    st.subheader("pretrained - matworks data")
    st.caption("created by YJH")
    st.write('hello!')

I left out the h in "Mathworks", haha...

Anyway, the page looks tidy now.

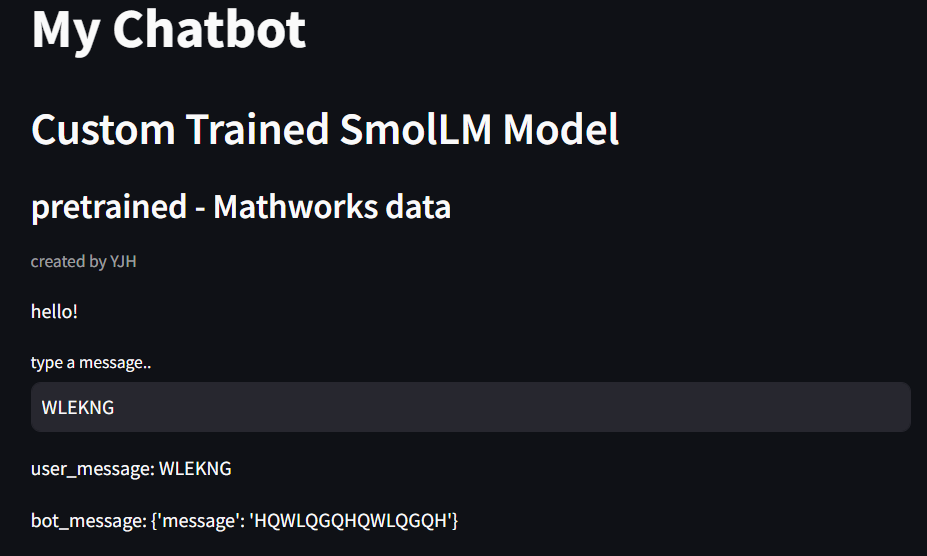

Now we need an input field.

    import streamlit as st

    st.title("My Chatbot")
    st.header("Custom Trained SmolLM Model")
    st.subheader("pretrained - Mathworks data")
    st.caption("created by YJH")
    st.write('hello!')

    st.text_input("type a message..", key="user_message")
    message = st.session_state.user_message
    st.write(f"user_message: {message}")

We can confirm that it works.

    import streamlit as st


    class llama_web():
        def __init__(self):
            pass

        def window(self):
            st.title("My Chatbot")
            st.header("Custom Trained SmolLM Model")
            st.subheader("pretrained - Mathworks data")
            st.caption("created by YJH")
            st.write('hello!')

            st.text_input("type a message..", key="user_message")
            message = st.session_state.user_message
            st.write(f"user_message: {message}")


    if __name__ == '__main__':
        web = llama_web()
        web.window()

I tidied up the code a bit by wrapping it in a class.

    import streamlit as st
    import requests
    import json


    class llama_web():
        def __init__(self):
            self.URL = "http://127.0.0.1:800/chat"

        def get_answer(self, message):
            param = {'user_message': message}
            resp = requests.post(self.URL, json=param)
            output = json.loads(resp.content)
            return output

        def window(self):
            st.title("My Chatbot")
            st.header("Custom Trained SmolLM Model")
            st.subheader("pretrained - Mathworks data")
            st.caption("created by YJH")
            st.write('hello!')

            st.text_input("type a message..", key="user_message")
            # message = st.session_state.user_message
            # st.write(f"user_message: {message}")
            if user_message := st.session_state['user_message']:
                output = self.get_answer(user_message)
                st.write(f"user_message: {user_message}")
                st.write(f"bot_message: {output}")


    if __name__ == '__main__':
        web = llama_web()
        web.window()

Huh...?

Ah, it was a typo, haha...

The port in the URL should be 8000, not 800.

Oh, now it is spitting something out.

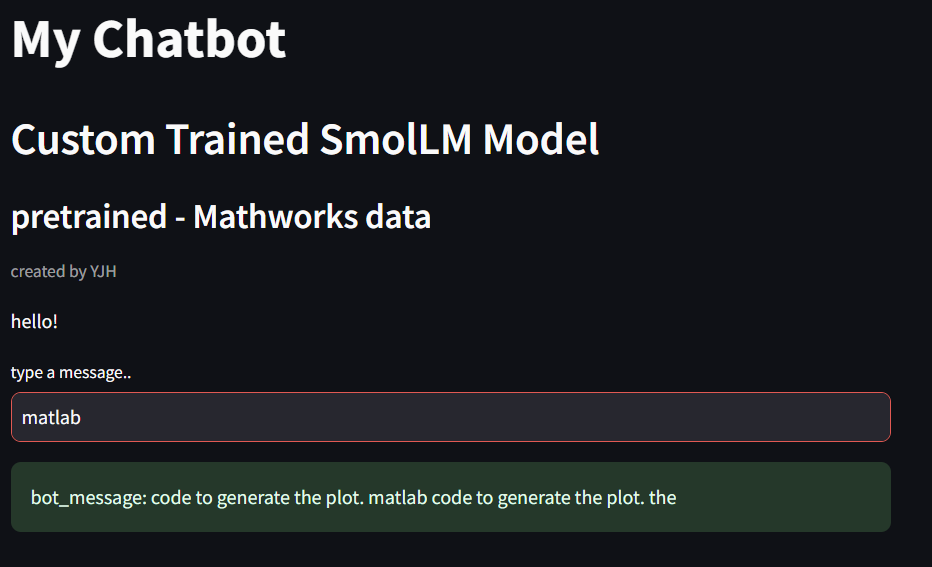

I polished it a little more.

    import streamlit as st
    import requests
    import json


    class llama_web():
        def __init__(self):
            self.URL = "http://127.0.0.1:8000/chat"

        def get_answer(self, message):
            param = {'user_message': message}
            resp = requests.post(self.URL, json=param)
            output = json.loads(resp.content)['message']
            return output

        def window(self):
            st.title("My Chatbot")
            st.header("Custom Trained SmolLM Model")
            st.subheader("pretrained - Mathworks data")
            st.caption("created by YJH")
            st.write('hello!')

            st.text_input("type a message..", key="user_message")
            # message = st.session_state.user_message
            # st.write(f"user_message: {message}")
            if user_message := st.session_state['user_message']:
                output = self.get_answer(user_message)
                # st.write(f"user_message: {user_message}")
                # st.write(f"bot_message: {output}")
                st.success(f"bot_message: {output}")  # shown inside a green box


    if __name__ == '__main__':
        web = llama_web()
        web.window()
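
The main change in this version is that `get_answer` returns only the `message` field of the JSON body instead of the whole dictionary. A minimal sketch of that step, with a hard-coded byte string standing in for `resp.content` (the payload and the helper name `extract_message` are assumptions for illustration):

```python
import json


def extract_message(raw_body: bytes) -> str:
    """Parse a JSON response body and return its 'message' field.

    Returns an empty string when the field is missing, so the UI
    does not crash on an unexpected payload.
    """
    payload = json.loads(raw_body)
    return payload.get("message", "")


if __name__ == "__main__":
    # Stand-in for resp.content from the /chat endpoint
    fake_body = b'{"message": "hello from the bot"}'
    print(extract_message(fake_body))
```

Using `.get` with a default is a design choice of this sketch; the code above indexes `['message']` directly, which raises `KeyError` if the server ever returns a different shape.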

Now, like a real chatbot, it should keep a history of the conversation, right?

    import streamlit as st
    import requests
    import json
    import pandas as pd


    class llama_web():
        def __init__(self):
            self.URL = "http://127.0.0.1:8000/chat"

        def get_answer(self, message):
            param = {'user_message': message}
            resp = requests.post(self.URL, json=param)
            output = json.loads(resp.content)['message']
            return output

        def update_log(self, user_message, bot_message):
            if 'chat_log' not in st.session_state:
                # Create the log the first time it is needed
                st.session_state.chat_log = {'user_message': [], 'bot_message': []}
            st.session_state.chat_log['user_message'].append(user_message)
            st.session_state.chat_log['bot_message'].append(bot_message)
            return st.session_state.chat_log

        def window(self):
            st.title("My Chatbot")
            st.header("Custom Trained SmolLM Model")
            st.subheader("pretrained - Mathworks data")
            st.caption("created by YJH")
            st.write('hello!')

            st.text_input("type a message..", key="user_message")
            # message = st.session_state.user_message
            # st.write(f"user_message: {message}")
            if user_message := st.session_state['user_message']:
                output = self.get_answer(user_message)
                # st.write(f"user_message: {user_message}")
                # st.write(f"bot_message: {output}")
                st.success(f"bot_message: {output}")  # shown inside a green box
                chat_log = self.update_log(user_message, output)
                st.write(pd.DataFrame(chat_log))


    if __name__ == '__main__':
        web = llama_web()
        web.window()
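
To see what `update_log` is doing without running Streamlit, here is a small sketch of the same logic with a plain dictionary standing in for `st.session_state` (the `state` argument is an assumption made so the example runs on its own):

```python
def update_log(state: dict, user_message: str, bot_message: str) -> dict:
    """Append one exchange to the chat log kept in `state`."""
    if "chat_log" not in state:
        # Created only once; later calls reuse the same lists
        state["chat_log"] = {"user_message": [], "bot_message": []}
    state["chat_log"]["user_message"].append(user_message)
    state["chat_log"]["bot_message"].append(bot_message)
    return state["chat_log"]


# A plain dict plays the role of st.session_state here
session = {}
update_log(session, "hi", "hello")
log = update_log(session, "how are you?", "fine")
print(log)
```

The log survives between calls only because `session` is the same object each time, which is the role `st.session_state` plays across Streamlit reruns.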

But a table like this does not really feel like a chatbot!

Let's make it more chatbot-like.

    import streamlit as st
    from streamlit_chat import message
    import requests
    import json
    import pandas as pd


    class llama_web():
        def __init__(self):
            self.URL = "http://127.0.0.1:8000/chat"

        def get_answer(self, message):
            param = {'user_message': message}
            resp = requests.post(self.URL, json=param)
            output = json.loads(resp.content)['message']
            return output

        def update_log(self, user_message, bot_message):
            if 'chat_log' not in st.session_state:
                # Create the log the first time it is needed
                st.session_state.chat_log = {'user_message': [], 'bot_message': []}
            st.session_state.chat_log['user_message'].append(user_message)
            st.session_state.chat_log['bot_message'].append(bot_message)
            return st.session_state.chat_log

        def window(self):
            st.title("My Chatbot")
            st.header("Custom Trained SmolLM Model")
            st.subheader("pretrained - Mathworks data")
            st.caption("created by YJH")
            st.write('hello!')

            st.text_input("type a message..", key="user_message")
            # message = st.session_state.user_message
            # st.write(f"user_message: {message}")
            if user_message := st.session_state['user_message']:
                output = self.get_answer(user_message)
                # st.write(f"user_message: {user_message}")
                # st.write(f"bot_message: {output}")
                st.success(f"bot_message: {output}")  # shown inside a green box
                chat_log = self.update_log(user_message, output)
                st.write(pd.DataFrame(chat_log))

        def chat(self):
            st.title("My Chatbot")
            st.header("Custom Trained SmolLM Model")
            st.subheader("pretrained - Mathworks data")
            st.caption("created by YJH")
            st.write('Give it a try!')

            message("Hello. I'm matworks user", is_user=True)
            message("Hello. I'm matworks chatbot", is_user=False)


    if __name__ == '__main__':
        web = llama_web()
        web.chat()

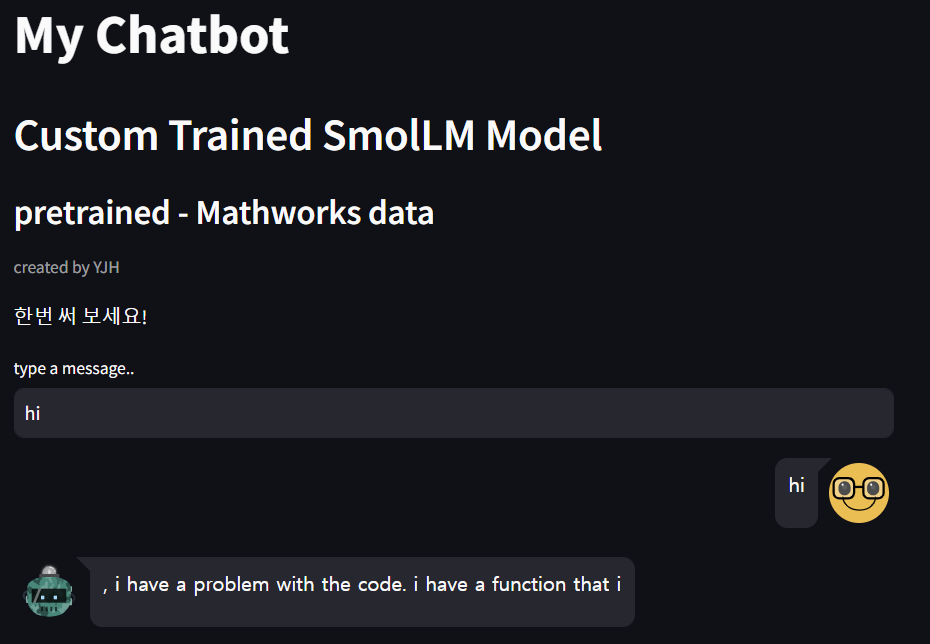

Cute!

Now let's add the input.

    import streamlit as st
    from streamlit_chat import message
    import requests
    import json
    import pandas as pd


    class llama_web():
        def __init__(self):
            self.URL = "http://127.0.0.1:8000/chat"

        def get_answer(self, message):
            param = {'user_message': message}
            resp = requests.post(self.URL, json=param)
            output = json.loads(resp.content)['message']
            return output

        def update_log(self, user_message, bot_message):
            if 'chat_log' not in st.session_state:
                # Create the log the first time it is needed
                st.session_state.chat_log = {'user_message': [], 'bot_message': []}
            st.session_state.chat_log['user_message'].append(user_message)
            st.session_state.chat_log['bot_message'].append(bot_message)
            return st.session_state.chat_log

        def window(self):
            st.title("My Chatbot")
            st.header("Custom Trained SmolLM Model")
            st.subheader("pretrained - Mathworks data")
            st.caption("created by YJH")
            st.write('hello!')

            st.text_input("type a message..", key="user_message")
            # message = st.session_state.user_message
            # st.write(f"user_message: {message}")
            if user_message := st.session_state['user_message']:
                output = self.get_answer(user_message)
                # st.write(f"user_message: {user_message}")
                # st.write(f"bot_message: {output}")
                st.success(f"bot_message: {output}")  # shown inside a green box
                chat_log = self.update_log(user_message, output)
                st.write(pd.DataFrame(chat_log))

        def chat(self):
            st.title("My Chatbot")
            st.header("Custom Trained SmolLM Model")
            st.subheader("pretrained - Mathworks data")
            st.caption("created by YJH")
            st.write('Give it a try!')

            # message("Hello. I'm matworks user", is_user=True)
            # message("Hello. I'm matworks chatbot", is_user=False)
            st.text_input("type a message..", key="user_message")
            if user_message := st.session_state['user_message']:
                output = self.get_answer(user_message)
                message(user_message, is_user=True)
                message(output)


    if __name__ == '__main__':
        web = llama_web()
        web.chat()

Now it really looks like a conversation, but the history is not being saved.

Let's reuse the logging code we wrote earlier.

    import streamlit as st
    from streamlit_chat import message
    import requests
    import json
    import pandas as pd


    class llama_web():
        def __init__(self):
            self.URL = "http://127.0.0.1:8000/chat"

        def get_answer(self, message):
            param = {'user_message': message}
            resp = requests.post(self.URL, json=param)
            output = json.loads(resp.content)['message']
            return output

        def update_log(self, user_message, bot_message):
            if 'chat_log' not in st.session_state:
                # Create the log the first time it is needed
                st.session_state.chat_log = {'user_message': [], 'bot_message': []}
            st.session_state.chat_log['user_message'].append(user_message)
            st.session_state.chat_log['bot_message'].append(bot_message)
            return st.session_state.chat_log

        def window(self):
            st.title("My Chatbot")
            st.header("Custom Trained SmolLM Model")
            st.subheader("pretrained - Mathworks data")
            st.caption("created by YJH")
            st.write('hello!')

            st.text_input("type a message..", key="user_message")
            # message = st.session_state.user_message
            # st.write(f"user_message: {message}")
            if user_message := st.session_state['user_message']:
                output = self.get_answer(user_message)
                # st.write(f"user_message: {user_message}")
                # st.write(f"bot_message: {output}")
                st.success(f"bot_message: {output}")  # shown inside a green box
                chat_log = self.update_log(user_message, output)
                st.write(pd.DataFrame(chat_log))

        def chat(self):
            st.title("My Chatbot")
            st.header("Custom Trained SmolLM Model")
            st.subheader("pretrained - Mathworks data")
            st.caption("created by YJH")
            st.write('Give it a try!')

            # message("Hello. I'm matworks user", is_user=True)
            # message("Hello. I'm matworks chatbot", is_user=False)
            st.text_input("type a message..", key="user_message")
            if user_message := st.session_state['user_message']:
                output = self.get_answer(user_message)
                # message(user_message, is_user=True)
                # message(output)
                chat_log = self.update_log(user_message, output)

                # Older messages go at the bottom, the newest at the top
                bot_messages = chat_log["bot_message"][::-1]  # reverse the order
                user_messages = chat_log["user_message"][::-1]  # reverse the order
                for idx, (bot, user) in enumerate(zip(bot_messages, user_messages)):
                    # Each message widget needs a unique key
                    message(bot, key=f"{idx}_bot")
                    message(user, key=f"{idx}_user", is_user=True)


    if __name__ == '__main__':
        web = llama_web()
        web.chat()
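
The newest-first ordering in `chat` comes from reversing both lists before zipping them. As a quick sketch of that idea outside Streamlit (the sample log and the helper name `newest_first` are made up for illustration):

```python
def newest_first(chat_log: dict) -> list:
    """Pair each user message with its reply, most recent exchange first."""
    pairs = list(zip(chat_log["user_message"], chat_log["bot_message"]))
    # Reversing the paired list keeps each question next to its own answer
    return pairs[::-1]


# Hypothetical log with two exchanges
sample_log = {
    "user_message": ["first question", "second question"],
    "bot_message": ["first reply", "second reply"],
}

for idx, (user, bot) in enumerate(newest_first(sample_log)):
    # idx gives each rendered message a distinct key, as in the chat() method
    print(f"{idx}_bot: {bot}")
    print(f"{idx}_user: {user}")
```

Zipping first and reversing once is equivalent to reversing both lists separately, as long as the two lists have the same length.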

It works nicely, haha...

Now I need to make the model itself better, haha...
