한국어는 교착어로 조사나 어미가 발달되어 있기 때문에 띄어쓰기 단위인 어절로 토큰화를 진행하면 의미적인 훼손이 일어난다.

형태소를 추출하여 분리하는 작업이 선행되어야 의미를 이해하는데 도움이 된다.

품사 태깅(POS)도 중요하다.

ex) fly = 날다(동사), 파리(명사)

오타와, 띄어쓰기가 없어도 형태소 분석기를 사용하면 잘 분류하나, 종류마다 성능이 다 다르다.

정제 과정은 아래에서 확인 가능합니다.

2024.03.05 - [인공지능/자연어 처리] - 한국어 데이터 전처리 - 한국어 코퍼스 전처리 Python 실습

한국어 데이터 전처리 - 한국어 코퍼스 전처리 Python 실습

한국어 코퍼스 전처리 실습 Introduction Chapter 2. 한국어 데이터 전처리 강의의 한국어 코퍼스 전처리 실습 강의입니다. 일반적인 한국어 코퍼스 처리 과정인 (1) 코퍼스 수집, (2) 정제(Cleaning) 및 정

yoonschallenge.tistory.com

단어의 형태는 동일해도 의미가 다를 수 있고(중의성, 동형어, 다의어, 동음이의어), 다른 형태가 동일한 의미를 가질 수 있다.

feature extraction - 데이터에서 특징을 찾아 벡터로 변환하는 작업 == embedding

특정 정보를 더하고, 빼서 기존의 단어를 찾아갈 수 있다.

두 벡터의 값을 통해 유사도를 구할 수 있다. - cos 유사도

임베딩의 통계적 가설

1. 단어 출현 빈도

2. 주변 단어와의 관계

3. 단어 등장 순서

출현 빈도에 기반한 임베딩 - 원 핫 인코딩, bag of words, TF-IDF

원 핫 인코딩 - sparse문제로 컴퓨터 성능 저하, 유사도 표현 불가

BOW = 출현 빈도 - 아직도 희소 문제와 순서 반영 불가 문제

TF-IDF = 단어 빈도 (TF)와 역 문서 빈도(IDF) - 맥락을 반영하지 못하고, 규모가 크면 높은 계산 복잡도

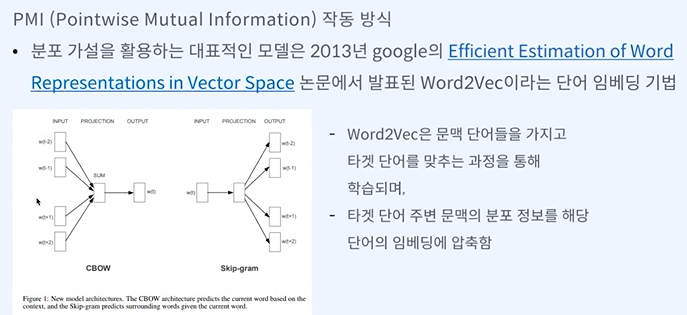

분포가설 - 비슷한 문맥 내에서 등장하는 단어는 비슷한 의미를 가진다. -> 즉, Window 크기에 따라 정의되는 문맥의 의미를 이용해 단어를 백터로 표현 -> 분산 표현 (distributed representation)

word2Vec - 주변 단어들로 학습하여 전체 정보가 전달되지 않는다.

CBOW : 주변 단어를 통해 중심 단어 유추

Skip-gram : 중심 단어를 통해 주변 단어 유추

CBOW보다 Skip-gram의 학습 횟수가 더 많아서 정교한 임베딩이 가능하다.

그래도 아직 OOV나 희소한 단어들의 임베딩이 어렵다.

고빈도 단어들은 학습 횟수를 줄인다. - Subsampling frequent words

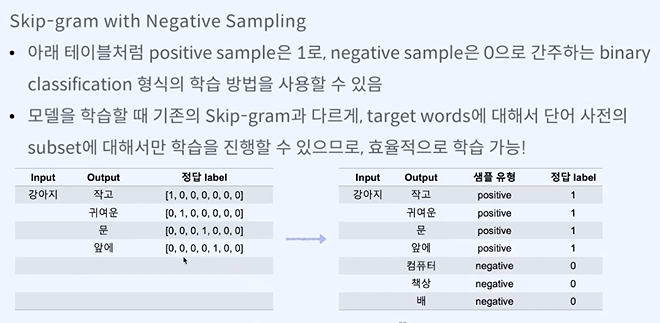

negative sampling

FastText

GloVe

Certainly! Let's break down these three popular word embedding techniques—Word2Vec, GloVe, and FastText—in a simple and smooth way.

1. Word2Vec

What it is: Word2Vec is a method developed by Google that transforms words into numerical vectors. These vectors represent words in a continuous vector space, where the distance between vectors indicates the similarity between words.

How it works: Word2Vec uses a neural network to learn the relationships between words based on their context. There are two main approaches:

- CBOW (Continuous Bag of Words): Predicts a word based on the context (surrounding words).

- Skip-Gram: Predicts the surrounding words given a specific word.

Why it's useful: Word2Vec captures semantic relationships, meaning that words with similar meanings have similar vector representations. For example, the vector for "king" might be close to the vector for "queen."

Key point: It only considers individual words, not subword information (like prefixes or suffixes).

2. GloVe (Global Vectors for Word Representation)

What it is: GloVe is another word embedding technique developed by researchers at Stanford. Like Word2Vec, it turns words into vectors, but it approaches the problem differently.

How it works: GloVe is based on matrix factorization techniques. It looks at word co-occurrence statistics in a large corpus (a collection of texts). Essentially, it counts how often pairs of words appear together and uses this information to build a model.

Why it's useful: GloVe captures both local and global context in its vectors, meaning it considers the entire corpus, not just the immediate context around a word. This helps create more meaningful word representations.

Key point: GloVe doesn't learn from word sequences directly, but from co-occurrence probabilities, which helps it capture broader context.

3. FastText

What it is: FastText, developed by Facebook's AI Research lab, is similar to Word2Vec but with an important enhancement: it considers subword information (like prefixes, suffixes, etc.).

How it works: FastText breaks words down into smaller parts called "n-grams" (which could be individual characters or groups of characters). This means that words are represented by the sum of the vectors of their n-grams. This helps in capturing the meaning of even rare words or misspellings, which Word2Vec and GloVe might miss.

Why it's useful: FastText can create vectors for words that weren’t seen during training by combining the vectors of their n-grams. This makes it very powerful for languages with lots of morphological variations or where words can be formed by adding prefixes, suffixes, etc.

Key point: It handles out-of-vocabulary words (words not seen during training) better because of its subword modeling.

Summary of Differences:

- Word2Vec learns vectors by predicting context and works well for known words but struggles with unseen words.

- GloVe uses global co-occurrence statistics to learn vectors, capturing broader context but still struggles with unseen words.

- FastText improves upon Word2Vec by considering subword information, making it better at handling unseen words or those with complex structures.

Why These Are Important:

These embeddings (Word2Vec, GloVe, and FastText) are foundational in many NLP (Natural Language Processing) tasks, like sentiment analysis, machine translation, and text classification. They help machines understand and process human language by converting words into a form (vectors) that can be analyzed mathematically.

Understanding these embeddings is a key step toward working with AI and NLP systems.

Great question! Let's dive into the differences between FastText and BPE (Byte-Pair Encoding). Both methods involve breaking down words into smaller units, but they are used differently and serve different purposes in natural language processing.

1. FastText

How it works: FastText represents words using n-grams (subword units like characters or groups of characters). For example, the word "playing" might be broken down into n-grams like "pla," "lay," "ayi," etc. FastText then represents the word as the sum of these n-gram vectors.

Purpose: The main goal of FastText is to improve word embeddings, particularly for rare words, by using subword information. This is very useful for languages with rich morphology, where words can have many variations.

Key Characteristics:

- Subword Modeling: FastText's n-grams help it capture information about word parts, allowing it to generate embeddings for words not seen during training.

- Continuous Vector Representations: The n-grams are combined to form a continuous vector for each word.

- Used for: Word embeddings, improving the handling of out-of-vocabulary words in tasks like text classification, translation, etc.

2. BPE (Byte-Pair Encoding)

How it works: BPE is a tokenization algorithm originally used for data compression, but it has been adapted for NLP, particularly in models like GPT and BERT. BPE works by iteratively merging the most frequent pairs of characters (or character sequences) into a single unit (subword). For example, starting with "l" and "o" as separate tokens, BPE might first merge "l" and "o" into "lo" if that combination appears frequently, and continue merging until no more frequent pairs are left.

Purpose: BPE aims to balance between using whole words as tokens and breaking them down into characters. It allows for efficient tokenization, handling rare words and subword units effectively by representing them as combinations of more frequent units.

Key Characteristics:

- Subword Units: BPE creates a variable-length token vocabulary where frequent words or subwords are represented as a single unit, while rare or unseen words are split into subwords.

- Tokenization for Text: BPE is primarily used in the tokenization step before feeding text into models like transformers (e.g., GPT, BERT).

- Used for: Tokenizing text in NLP tasks, especially for models that need to handle large vocabularies with many rare or unseen words.

Comparison: FastText vs. BPE

- Purpose:

- FastText is designed to create word embeddings that capture subword information, helping to model words, especially rare or unseen ones, in a continuous vector space.

- BPE is a tokenization technique that breaks down text into subword units for input into models. It creates a vocabulary that can efficiently represent both common and rare words.

- How They Handle Subwords:

- FastText uses fixed-length n-grams to create word embeddings. It focuses on representing words as vectors based on their subword components.

- BPE dynamically creates subword units based on the frequency of character pairs. It doesn't create embeddings but instead creates a tokenization scheme that models the input text effectively.

- Application:

- FastText is used to improve the quality of word embeddings, often in downstream tasks like classification or translation.

- BPE is used to tokenize text before feeding it into models, particularly in transformer-based architectures, to handle words not in the vocabulary and compress the input space.

Summary:

- FastText is about improving word embeddings by using subword information (n-grams), making it easier for the model to understand and work with words, especially those that are rare or morphologically complex.

- BPE is a tokenization method that breaks down text into subword units by merging frequent pairs of characters or subwords, optimizing how text is fed into models, particularly those based on transformers.

Both approaches leverage subwords, but for different ends—FastText for embedding words and BPE for tokenizing text.

통계 기반 언어 모델 - Statistical Language Model, SLM

단어 순서 정보를 명시적으로 학습한다.

단어 n개가 주어졌을 때 이 단어들이 동시에 나올 확률

이렇게 하면 확률이 많이 줄어드므로 n-gram을 통해 제한하여 확률을 높이기도 한다.

여전히 sparse한 문제가 있다. 또한 새로운 조합은 계속 등장할 텐데 데이터가 없다면 그것에 대해서는 예측하지도 못한다.

n을 어떻게 정하냐에 대한 문제도 존재한다.

통계의 한계를 벗어나보자

백 오프 - 특정 n-gram내에 단어가 없다면 n을 줄여서 다시 확률을 확인하는 것

스무딩 - 모든 등장 빈도에 k만큼 더하여 최소 k의 확률을 가지게 된다.

딥러닝 기반 언어 모델

Masking - 양방향으로 학습하여 마스킹 된 단어 맞추기

NTP - 단방향으로 학습하여 다음 토큰 맞추기

'인공지능 > 자연어 처리' 카테고리의 다른 글

| 자연어 처리 복습 5 - 사전 학습, 전이 학습, 미세 조정 (4) | 2024.09.03 |

|---|---|

| 자연어 처리 복습 4 - seq2seq, ELMo, Transformer, GPT, BERT (0) | 2024.09.03 |

| 세미나 정리 8-29 (0) | 2024.08.29 |

| 자연어 처리 복습 2 - NLP Task, token, 데이터 전처리 (0) | 2024.08.27 |

| 자연어 처리 복습 1 - transformer, token, attention (0) | 2024.08.26 |